Apple is today announcing a trio of new efforts to bring new protections for children to iPhone, iPad, and Mac. These include new communication safety features in Messages, enhanced detection of Child Sexual Abuse Material (CSAM) in iCloud, and updated guidance in Siri and Search.

Apple emphasizes that the new program is ambitious, but says that “protecting children is an important responsibility.” With that in mind, Apple says that its efforts will “evolve and expand over time.”

Messages

The first announcement today is a new communication safety feature in the Messages app. Apple explains that when a child who is in an iCloud Family receives or attempts to send sexually explicit photos, the child will see a warning message.

Apple explains that when a child receives a sexually explicit image, the image will be blurred and the Messages app will display a warning saying the image “may be sensitive.” If the child taps “View photo,” they’ll see a pop-up message that informs them why the image is considered sensitive.

The pop-up explains that if the child decides to view the image, their iCloud Family parent will receive a notification “to make sure you’re OK.” The pop-up will also include a quick link to receive additional help.

Additionally, if a child attempts to send an image that is sexually explicit, they will see a similar warning. Apple says the child will be warned before the photo is sent and that, for kids under the age of 13, the parents can receive a message if the child chooses to send it.

Apple further explains that Messages uses on-device machine learning to analyze image attachments and determine whether a photo is sexually explicit. iMessage remains end-to-end encrypted and Apple does not gain access to any of the messages. The feature will also be opt-in.

Apple says that this feature is coming “later this year to accounts set up as families in iCloud” in updates to iOS 15, iPadOS 15, and macOS Monterey. The feature will be available in the US to start.

CSAM detection

Second, and perhaps most notably, Apple is announcing new steps to combat the spread of Child Sexual Abuse Material, or CSAM. Apple explains that CSAM refers to content that depicts sexually explicit activities involving a child.

This feature, which leaked in part earlier today, will allow Apple to detect known CSAM images when they are stored in iCloud Photos. Apple can then report instances of CSAM to the National Center for Missing and Exploited Children, an entity that acts as a comprehensive reporting agency for CSAM and works closely with law enforcement.

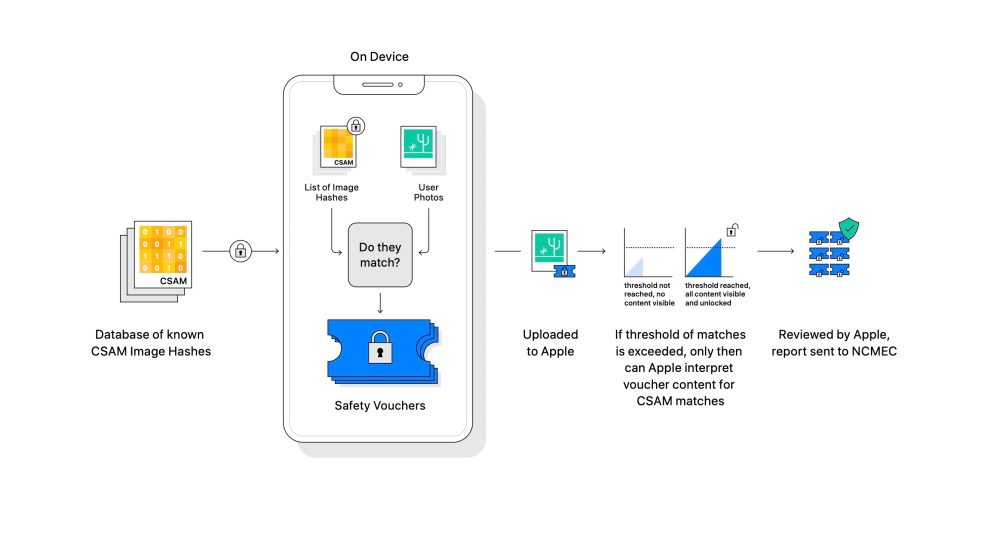

Apple repeatedly underscores that its method of detecting CSAM is designed with user privacy in mind. In plain English, Apple is essentially analyzing the images on your device to see if there are any matches against a database of known CSAM images provided by the National Center for Missing and Exploited Children. All of the matching is done on device, with Apple transforming the database from the National Center for Missing and Exploited Children into an “unreadable set of hashes that is securely stored on users’ devices.”

Apple explains:

Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the unreadable set of known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. Private set intersection (PSI) allows Apple to learn if an image hash matches the known CSAM image hashes, without learning anything about image hashes that do not match. PSI also prevents the user from learning whether there was a match.

If there is an on-device match, the device then creates a cryptographic safety voucher that encodes the match result. A technology called threshold secret sharing is then employed. This ensures the contents of the safety vouchers cannot be interpreted by Apple unless the iCloud Photos account crosses a threshold of known CSAM content.

“For example, if a secret is split into 1,000 shares, and the threshold is 10, then the secret can be reconstructed from any 10 of the 1,000 shares. However, if only nine shares are available, then nothing is revealed about the secret,” Apple says.
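Apple’s quoted example can be illustrated with a short sketch. The article does not say which construction Apple uses, so the code below assumes Shamir’s secret sharing, a classic threshold scheme, purely as an illustration. The function names `split_secret` and `reconstruct_secret`, the prime modulus, and the sample secret are all hypothetical choices made for this example and are not taken from Apple’s documentation.

```python
import random
import secrets

# Assumption for this sketch: arithmetic is done modulo a fixed prime
# (2**127 - 1 is a Mersenne prime), so secrets must be smaller than it.
PRIME = 2**127 - 1


def split_secret(secret, threshold, num_shares, prime=PRIME):
    """Split `secret` into `num_shares` shares; any `threshold` of them recover it."""
    if not 0 <= secret < prime:
        raise ValueError("secret must be in the range [0, prime)")
    if not 1 <= threshold <= num_shares < prime:
        raise ValueError("need 1 <= threshold <= num_shares < prime")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(prime) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, evaluated mod prime
            y = (y * x + c) % prime
        shares.append((x, y))
    return shares


def reconstruct_secret(shares, prime=PRIME):
    """Recover the polynomial's value at x = 0 by Lagrange interpolation."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % prime
                den = (den * (xi - xj)) % prime
        # pow(den, -1, prime) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, prime)) % prime
    return secret


if __name__ == "__main__":
    # Mirrors the numbers in the quote: 1,000 shares, threshold of 10.
    shares = split_secret(123456789, threshold=10, num_shares=1000)
    print(reconstruct_secret(random.sample(shares, 10)))  # any 10 shares suffice
    print(reconstruct_secret(shares[:9]))  # nine shares give an unrelated value
```

In this sketch, any 10 of the 1,000 shares determine the degree-9 polynomial and therefore the secret. With only nine shares, every candidate secret remains equally consistent with the data, which is the property the quote describes.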

Apple isn’t disclosing the specific threshold it will use, that is, the number of CSAM matches required before it is able to interpret the contents of the safety vouchers. Once that threshold is reached, however, Apple will manually review the report to confirm the match, then disable the user’s account and send a report to the National Center for Missing and Exploited Children.

The threshold technology is important because it is meant to keep accounts from being incorrectly flagged. The manual review adds another confirmation step to prevent mistakes, and users will be able to file an appeal to have their account reinstated. Apple says the system has a low error rate of less than 1 in 1 trillion accounts per year.

Even though everything is done on-device, Apple only analyzes pictures that are stored in iCloud Photos. Images stored entirely locally are not involved in this process. Apple says the on-device system is important and more privacy-preserving than cloud-based scanning because it only reports users who have CSAM images, as opposed to scanning everyone’s photos constantly in the cloud.

Apple’s implementation of this feature is highly technical, and more details can be learned at the links below.

Apple says the feature will come first to the United States but it hopes to expand elsewhere eventually.

Siri and Search

Finally, Apple is making upgrades to Siri and Search:

Apple is also expanding guidance in Siri and Search by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.

Siri and Search are also being updated to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue.

The updates to Siri and Search are coming later this year in an update to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.

Testimonials

John Clark, President & CEO, National Center for Missing & Exploited Children: “Apple’s expanded protection for children is a game changer. With so many people using Apple products, these new safety measures have lifesaving potential for children who are being enticed online and whose horrific images are being circulated in child sexual abuse material. At the National Center for Missing & Exploited Children we know this crime can only be combated if we are steadfast in our dedication to protecting children. We can only do this because technology partners, like Apple, step up and make their dedication known. The reality is that privacy and child protection can co-exist. We applaud Apple and look forward to working together to make this world a safer place for children.”

Julie Cordua, CEO, Thorn: “At Thorn we believe in the right to online privacy, including for children whose sexual abuse is recorded and distributed across the internet without consent. The commitment from Apple to deploy technology solutions that balance the need for privacy with digital safety for children brings us a step closer to justice for survivors whose most traumatic moments are disseminated online; a step closer to a world where every digital platform with an upload button is committed to the proactive detection of CSAM across all environments; and a step closer to a world where every child has the opportunity to simply be a kid.”

Stephen Balkam, Founder and CEO, Family Online Safety Institute: “We support the continued evolution of Apple’s approach to child online safety. Given the challenges parents face in protecting their kids online, it is imperative that tech companies continuously iterate and improve their safety tools to respond to new risks and actual harms.”

Former Attorney General Eric Holder: “The historic rise in the proliferation of child sexual abuse material online is a challenge that must be met by innovation from technologists. Apple’s new efforts to detect CSAM represent a major milestone, demonstrating that child safety doesn’t have to come at the cost of privacy, and is another example of Apple’s longstanding commitment to make the world a better place while consistently protecting consumer privacy.”

Former Deputy Attorney General George Terwilliger: “Apple’s announcements represent a very significant and welcome step in both empowering parents and assisting law enforcement authorities in their efforts to avoid harm to children from purveyors of CSAM. With Apple’s expanded efforts around CSAM detection and reporting, law enforcement will be able to better identify and stop those in our society who pose the greatest threat to our children.”

Benny Pinkas, professor in the Department of Computer Science in Bar Ilan University: “The Apple PSI system provides an excellent balance between privacy and utility, and will be extremely helpful in identifying CSAM content while maintaining a high level of user privacy and keeping false positives to a minimum.”

Mihir Bellare, professor in the Department of Computer Science and Engineering at UC San Diego: “Taking action to limit CSAM is a laudable step. But its implementation needs some care. Naively done, it requires scanning the photos of all iCloud users. But our photos are personal, recording events, moments and people in our lives. Users expect and desire that these remain private from Apple. Reciprocally, the database of CSAM photos should not be made public or become known to the user. Apple has found a way to detect and report CSAM offenders while respecting these privacy constraints.”

David Forsyth, Chair in Computer Science in the University of Illinois at Urbana-Champaign College of Engineering: “Apple’s approach preserves privacy better than any other I am aware of […] In my judgement this system will likely significantly increase the likelihood that people who own or traffic in [CSAM] are found; this should help protect children. Harmless users should experience minimal to no loss of privacy, because visual derivatives are revealed only if there are enough matches to CSAM pictures, and only for the images that match known CSAM pictures. The accuracy of the matching system, combined with the threshold, makes it very unlikely that pictures that are not known CSAM pictures will be revealed.”

Source: Apple announces new protections for child safety: iMessage features, iCloud Photo scanning, more - 9to5Mac (https://ift.tt/3ipBMi0)
